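When we go to a library, we head straight for the section we like and look for books there. If the sections are not finely divided, finding one particular book can still be hard. In the same way, when we collect book data, the raw files mix all kinds of records together, which makes the data hard to search and use. So today I'll show how to classify books with Scrapy, the Python crawling framework. Let's work through it together: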

The spider:

Before showing the code, let's analyze the pages and links we are going to crawl:

URL: http://category.dangdang.com/pg4-cp01.25.17.00.00.00.html

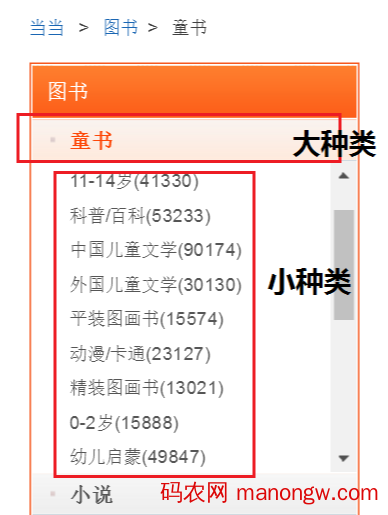

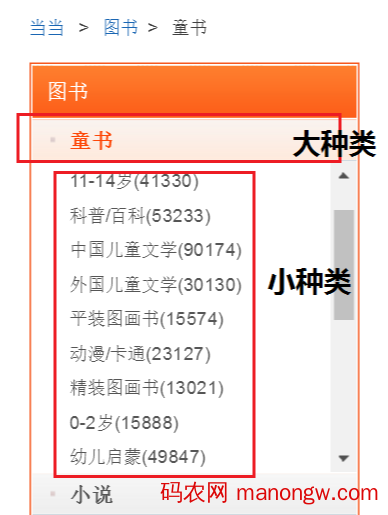

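This is a Dangdang book-listing link. Analyzing it shows that the big-category id is the 25 in cp01.25, the small-category id is the third segment, 17, and pg4 means page 4 of the book listing under that big category → small category.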
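The URL scheme described above can be captured in two small helpers. This is a sketch based only on the pattern in the example link; `build_listing_url` and `parse_listing_url` are hypothetical names, and using `00` in the small-category slot for "no small category" mirrors the big-category URLs the spider builds later:

```python
import re

# Listing URL pattern taken from the example link:
#   http://category.dangdang.com/pg{page}-cp01.{big}.{small}.00.00.00.html
BASE = "http://category.dangdang.com/pg{page}-cp01.{big}.{small}.00.00.00.html"
URL_RE = re.compile(r"/pg(\d+)-cp01\.(\d+)\.(\d+)\.00\.00\.00\.html$")


def build_listing_url(page, big_id, small_id="00"):
    """Return the URL of one listing page for a big -> small category.

    small_id defaults to "00", i.e. the whole big category.
    """
    return BASE.format(page=page, big=big_id, small=small_id)


def parse_listing_url(url):
    """Split a listing URL back into (page, big_id, small_id)."""
    match = URL_RE.search(url)
    if match is None:
        raise ValueError(f"not a listing URL: {url}")
    page, big_id, small_id = match.groups()
    return int(page), big_id, small_id
```

For the example link, `parse_listing_url` returns `(4, "25", "17")`.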

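To record, while scraping each book, which big category and which small category under it the book belongs to, we work in three steps. Step 1: split by big category, finding the name and id of each big book category on the home page. Step 2: using links generated from the big-category ids, find the name and id of every second-level subcategory under each big category. Step 3: scrape each book's information under its big category → small category.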
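The payoff of carrying both category levels on every item is that the flat scraped output can be regrouped afterwards. Below is a minimal sketch of that regrouping; `group_by_category` is a hypothetical helper, not part of the original project, and it assumes each item is a plain dict with the spider's `category2` (big category), `category1` (small category) and `title` fields:

```python
from collections import defaultdict


def group_by_category(items):
    """Nest flat item dicts as {big category: {small category: [titles]}}."""
    tree = defaultdict(lambda: defaultdict(list))
    for item in items:
        tree[item["category2"]][item["category1"]].append(item["title"])
    # Convert to plain dicts so the result prints and compares cleanly
    return {big: dict(smalls) for big, smalls in tree.items()}
```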

Here are the steps one by one:

1. We subclass RedisSpider and use start_urls as the initial link for requesting the book data on the home page.

```python
# -*- coding: utf-8 -*-
import scrapy
import requests
from lxml import etree
from scrapy_redis.spiders import RedisSpider

from ..items import DangdangItem


class DangdangSpider(RedisSpider):
    name = "dangdangspider"
    redis_key = "dangdangspider:urls"
    allowed_domains = ["dangdang.com"]
    # Home page of the book section (cp01 = books)
    start_urls = ["http://category.dangdang.com/cp01.00.00.00.00.00.html"]

    def start_requests(self):
        user_agent = ("Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 "
                      "(KHTML, like Gecko) Chrome/49.0.2623.22 Safari/537.36 "
                      "SE 2.X MetaSr 1.0")
        headers = {"User-Agent": user_agent}
        # start_urls is a list, so request each entry (here only the home page)
        for url in self.start_urls:
            yield scrapy.Request(url=url, headers=headers, method="GET",
                                 callback=self.parse)
```
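Subclassing `RedisSpider` on its own does not make Scrapy schedule requests through Redis; with scrapy-redis that is normally switched on in the project's `settings.py`. The excerpt below is an assumed configuration, not taken from the original project, and presumes a Redis server at the default local address:

```python
# settings.py (excerpt, assumed configuration)
# Keep the request queue in Redis instead of in memory
SCHEDULER = "scrapy_redis.scheduler.Scheduler"
# Store request fingerprints in Redis so several workers share deduplication
DUPEFILTER_CLASS = "scrapy_redis.dupefilter.RFPDupeFilter"
# Keep the queue and fingerprints in Redis after the spider stops
SCHEDULER_PERSIST = True
# Location of the Redis server (assumed local default)
REDIS_URL = "redis://localhost:6379"
```

Because `start_requests` is overridden, the first request comes from `start_urls` rather than from the `dangdangspider:urls` list. By default scrapy-redis keeps an idle spider alive and polls that key, so further URLs can still be pushed there (for example with `redis-cli lpush dangdangspider:urls <url>`), and the spider has to be stopped manually.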

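2. On the home page, scrape the name and id of each big category. The meta passed with each yielded request carries the big-category id and name matched in that iteration.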

```python
    def parse(self, response):
        user_agent = ("Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 "
                      "(KHTML, like Gecko) Chrome/49.0.2623.22 Safari/537.36 "
                      "SE 2.X MetaSr 1.0")
        headers = {"User-Agent": user_agent}
        # Dangdang serves GBK-encoded pages
        selector = etree.HTML(response.body.decode("gbk"))
        # Each <li> in the left-hand menu is one big category
        goodslist = selector.xpath('//*[@id="leftCate"]/ul/li')
        for goods in goodslist:
            try:
                category_big = goods.xpath("a/text()").pop().replace(" ", "")  # big-category name
                category_big_id = goods.xpath("a/@href").pop().split(".")[1]   # big-category id
                category_big_url = ("http://category.dangdang.com/"
                                    "pg1-cp01.{}.00.00.00.00.html".format(category_big_id))
                yield scrapy.Request(url=category_big_url, headers=headers,
                                     callback=self.detail_parse,
                                     meta={"ID1": category_big_id, "ID2": category_big})
            except IndexError:
                # .pop() on an empty result: this <li> has no link, skip it
                pass
```
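To see what the XPath and string handling in `parse` extract, here is a self-contained sketch run on a made-up, simplified fragment of the category menu. It swaps lxml for the standard library's `xml.etree.ElementTree` (which supports this limited XPath subset) only so it runs without extra packages; the markup and the ids 25 and 41 are hypothetical, and the relative hrefs are an assumption implied by `split(".")[1]` in the spider:

```python
import xml.etree.ElementTree as ET

# Hypothetical, simplified markup for the left-hand category menu
SAMPLE = """
<html><body>
  <div id="leftCate">
    <ul>
      <li><a href="/cp01.25.00.00.00.00.html">Fiction</a></li>
      <li><a href="/cp01.41.00.00.00.00.html">Children</a></li>
      <li><span>no link here</span></li>
    </ul>
  </div>
</body></html>
"""


def extract_big_categories(html):
    """Return (id, name, listing URL) for each big category in the menu."""
    root = ET.fromstring(html.strip())
    results = []
    for li in root.findall(".//*[@id='leftCate']/ul/li"):
        link = li.find("a")
        if link is None or not link.get("href"):
            continue  # skip entries without a category link
        name = (link.text or "").replace(" ", "")
        # "/cp01.25.00.00.00.00.html".split(".") -> ["/cp01", "25", "00", ...]
        big_id = link.get("href").split(".")[1]
        url = "http://category.dangdang.com/pg1-cp01.{}.00.00.00.00.html".format(big_id)
        results.append((big_id, name, url))
    return results
```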

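3. Using the big-category id passed in, scrape the small-category labels under each big category. The meta passed with each yielded request carries the big-category id and name plus the small-category id and name.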

```python
    def detail_parse(self, response):
        """
        ID1: big-category id     ID2: big-category name
        ID3: small-category id   ID4: small-category name
        """
        # response is already the big-category page
        # (pg1-cp01.{ID1}.00.00.00.00.html), so parse it directly instead of
        # downloading the same URL a second time with requests
        contents = etree.HTML(response.body.decode("gbk"))
        # Sub-category links sit in the first row of the "sort_box" filter
        goodslist = contents.xpath('//*[@class="sort_box"]/ul/li[1]/div/span')
        for goods in goodslist:
            try:
                # Keep only the text before "(" in the link label
                category_small_name = (goods.xpath("a/text()").pop()
                                       .replace(" ", "").split("(")[0])
                category_small_id = goods.xpath("a/@href").pop().split(".")[2]
                category_small_url = ("http://category.dangdang.com/"
                                      "pg1-cp01.{}.{}.00.00.00.html".format(
                                          response.meta["ID1"], category_small_id))
                yield scrapy.Request(url=category_small_url, callback=self.third_parse,
                                     meta={"ID1": response.meta["ID1"],
                                           "ID2": response.meta["ID2"],
                                           "ID3": category_small_id,
                                           "ID4": category_small_name})
            except IndexError:
                # Missing link text or href: skip this entry
                pass
```
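The string handling in `detail_parse`, and the way each request extends its parent's `meta`, can be isolated as pure functions. This is a sketch with hypothetical helper names, assuming link text made of a name followed by a parenthesized count and relative hrefs like `/cp01.25.17.00.00.00.html`:

```python
def parse_subcategory(link_text, href):
    """Turn one sub-category link into (small_id, small_name)."""
    # Drop spaces, then keep the part before "(" (the name)
    name = link_text.replace(" ", "").split("(")[0]
    # "/cp01.25.17.00.00.00.html".split(".")[2] -> "17"
    small_id = href.split(".")[2]
    return small_id, name


def child_meta(parent_meta, small_id, small_name):
    """Copy the big-category meta (ID1, ID2) and add ID3, ID4."""
    meta = dict(parent_meta)
    meta.update({"ID3": small_id, "ID4": small_name})
    return meta
```

Stripping every space is harmless for Chinese category names, but it would merge words in names that contain spaces.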

4. Scrape the book information under each big category → small category.

```python
    def third_parse(self, response):
        # Fetch up to 100 listing pages of this big -> small category
        for i in range(1, 101):
            url = "http://category.dangdang.com/pg{}-cp01.{}.{}.00.00.00.html".format(
                i, response.meta["ID1"], response.meta["ID3"])
            try:
                # Blocking download outside Scrapy's scheduler, as in the original design
                page = requests.get(url, timeout=10)
                contents = etree.HTML(page.content.decode("gbk"))
                goodslist = contents.xpath('//*[@class="list_aa listimg"]/li')
            except Exception:
                continue  # network or decoding error: skip this page
            for goods in goodslist:
                item = DangdangItem()
                try:
                    item["comments"] = goods.xpath("div/p[2]/a/text()").pop()
                    item["title"] = goods.xpath("div/p[1]/a/text()").pop()
                    item["time"] = goods.xpath("div/div/p[2]/text()").pop().replace("/", "")
                    item["price"] = goods.xpath("div/p[6]/span[1]/text()").pop()
                    item["discount"] = goods.xpath("div/p[6]/span[3]/text()").pop()
                    item["category1"] = response.meta["ID4"]  # small category
                    item["category2"] = response.meta["ID2"]  # big category
                except IndexError:
                    continue  # a field is missing: skip this book instead of yielding a partial item
                yield item
```
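`third_parse` always requests 100 pages, even for categories with far fewer. One possible refinement, sketched here as a pure function with a hypothetical, injected `fetch_page` callable so it can be tested without the network, is to stop at the first page that lists no books. This assumes out-of-range pages come back empty; if the site instead repeats the last page, the stop condition would need to change:

```python
def crawl_category(fetch_page, big_id, small_id, max_pages=100):
    """Collect items page by page, stopping at the first empty page.

    fetch_page(url) is a hypothetical callable that downloads one listing
    page and returns the list of items parsed from it.
    """
    collected = []
    for page in range(1, max_pages + 1):
        url = "http://category.dangdang.com/pg{}-cp01.{}.{}.00.00.00.html".format(
            page, big_id, small_id)
        items = fetch_page(url)
        if not items:
            break  # assumed to be past the last page: no books listed
        collected.extend(items)
    return collected
```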

That concludes this worked example of classifying books with Python's Scrapy crawler.